[cs231n_review#Lecture 2-3] Setting Hyperparameters

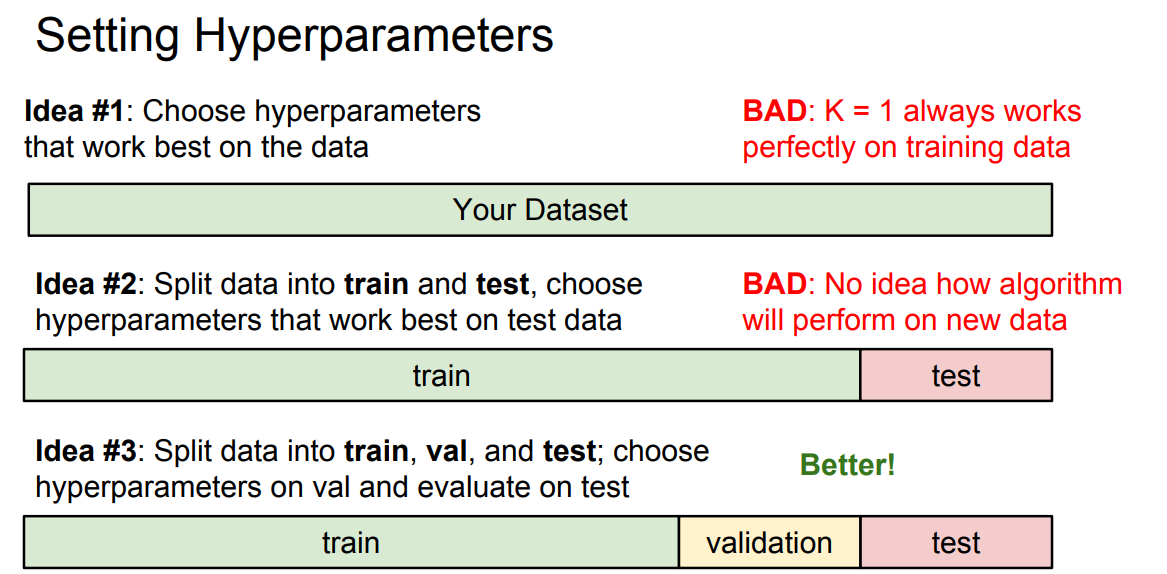

idea #1

If we use this strategy, picking whichever hyperparameters work best on the data we trained on, we'll always pick K=1, because with K=1 every training point is its own nearest neighbor and the training data is classified perfectly. In practice, though, setting K to a larger value might cause us to misclassify some of the training data but, in fact, lead to better performance on points that were not in the training data. We don't care about fitting the training data; we really care about how our classifier, or our method, will perform on unseen data after training. So this is a terrible idea. Don't do this.
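A minimal sketch of why K=1 always wins when it is scored on the training data itself. The toy dataset, the 20% label noise, and the helper names (`knn_predict`, `accuracy`, `make_noisy_data`) are assumptions for illustration and are not from the lecture.

```python
import random
from collections import Counter

def knn_predict(train_x, train_y, query, k):
    """Majority vote among the k training points nearest to `query` (squared L2 distance)."""
    order = sorted(
        range(len(train_x)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], query)),
    )
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]

def accuracy(train_x, train_y, eval_x, eval_y, k):
    """Fraction of evaluation points that k-NN labels correctly."""
    hits = sum(knn_predict(train_x, train_y, x, k) == y for x, y in zip(eval_x, eval_y))
    return hits / len(eval_x)

def make_noisy_data(n, rng, flip=0.2):
    """Toy 2-D data (an assumption): label is 1 when x0 + x1 > 0, with some labels flipped."""
    xs, ys = [], []
    for _ in range(n):
        p = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        y = int(p[0] + p[1] > 0)
        if rng.random() < flip:
            y = 1 - y  # label noise
        xs.append(p)
        ys.append(y)
    return xs, ys

rng = random.Random(0)
train_x, train_y = make_noisy_data(200, rng)

# Evaluate on the SAME points we trained on.
train_acc_k1 = accuracy(train_x, train_y, train_x, train_y, k=1)
train_acc_k7 = accuracy(train_x, train_y, train_x, train_y, k=7)

# With k=1 each point is its own nearest neighbor (distance 0), so this is 1.0.
print("k=1 training accuracy:", train_acc_k1)
print("k=7 training accuracy:", train_acc_k7)
```

Because the k=1 score on the training set is perfect regardless of how noisy the labels are, it says nothing about how the classifier will behave on new points.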

idea #2

The point of the test set is to give us an estimate of how our method will do on unseen data coming in from the wild. If we train many different algorithms with different hyperparameters and then select the one that does best on the test data, it's possible that we have just picked the set of hyperparameters that happens to work well on this particular test set. Our performance on this test set is then no longer representative of our performance on new, unseen data.
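The effect can be made concrete with a deliberately extreme toy setup (an assumption, not something from the lecture): the labels are coin flips, so no predictor can truly beat 50%, and each "hyperparameter setting" is a hypothetical stand-in that simply guesses at random. Picking the best of many such candidates by test accuracy still produces a flattering test score.

```python
import random

rng = random.Random(0)
n_test, n_fresh, n_candidates = 50, 5000, 200

# Labels are coin flips, so the true accuracy of any predictor is 50%.
test_y = [rng.randint(0, 1) for _ in range(n_test)]
fresh_y = [rng.randint(0, 1) for _ in range(n_fresh)]

def random_candidate(seed):
    """Hypothetical stand-in for one hyperparameter setting: it guesses labels at random."""
    r = random.Random(seed)
    return lambda n: [r.randint(0, 1) for _ in range(n)]

def acc(pred, truth):
    """Fraction of predictions that match the true labels."""
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)

# Score every candidate on the test set and keep the best one.
scores = []
for seed in range(1, n_candidates + 1):
    predict = random_candidate(seed)
    scores.append((acc(predict(n_test), test_y), seed))
best_test_acc, best_seed = max(scores)

# Re-create the winning candidate and score it on fresh data it was not selected on.
fresh_acc = acc(random_candidate(best_seed)(n_fresh), fresh_y)

print("best accuracy on the test set used for selection:", best_test_acc)
print("same candidate on fresh data:", fresh_acc)
```

The selected candidate looks better than chance on the test set only because it was chosen for that; on fresh data it falls back to roughly 50%.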

idea #3

What we typically do is train our algorithm with many different choices of hyperparameters on the training set, evaluate each on the validation set, and pick the set of hyperparameters that performs best on the validation set. ...

Then you'd take the classifier that performed best on the validation set and run it once on the test set. That's the number that goes into your paper, that's the number that goes into your report, and that's the number that actually tells you how your algorithm is doing on unseen data.
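The train / validation / test protocol can be sketched as follows for a k-NN classifier. The toy data, the 400/100/100 split, the candidate values of k, and the helper names are all assumptions chosen for illustration.

```python
import random
from collections import Counter

def knn_predict(train_x, train_y, query, k):
    """Majority vote among the k training points nearest to `query` (squared L2 distance)."""
    order = sorted(
        range(len(train_x)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], query)),
    )
    return Counter(train_y[i] for i in order[:k]).most_common(1)[0][0]

def accuracy(train_x, train_y, eval_x, eval_y, k):
    """Fraction of evaluation points that k-NN labels correctly."""
    hits = sum(knn_predict(train_x, train_y, x, k) == y for x, y in zip(eval_x, eval_y))
    return hits / len(eval_x)

# Toy 2-D data (an assumption): label is 1 when x0 + x1 > 0, with 20% of labels flipped.
rng = random.Random(0)
data = []
for _ in range(600):
    p = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    y = int(p[0] + p[1] > 0)
    if rng.random() < 0.2:
        y = 1 - y
    data.append((p, y))

# Split once into three disjoint sets.
rng.shuffle(data)
train, val, test = data[:400], data[400:500], data[500:]
train_x, train_y = zip(*train)
val_x, val_y = zip(*val)
test_x, test_y = zip(*test)

# Choose k using the validation set only.
candidate_ks = [1, 3, 5, 7, 11, 15]
val_acc = {k: accuracy(train_x, train_y, val_x, val_y, k) for k in candidate_ks}
best_k = max(candidate_ks, key=lambda k: val_acc[k])

# Touch the test set exactly once, with the already-chosen k.
test_acc = accuracy(train_x, train_y, test_x, test_y, best_k)

print("validation accuracy per k:", val_acc)
print("chosen k:", best_k)
print("test accuracy (reported number):", test_acc)
```

The test set plays no part in choosing `best_k`, so `test_acc` remains an honest estimate of performance on unseen data.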

It is really, really important to keep a very strict separation between the validation data and the test data. So, for example, when I'm writing papers, I tend to only touch the test set for my problem in maybe the week before the deadline or so, to really ensure that we're not being dishonest here and we're not reporting a number which is unfair.